In January 2017 I joined the Daydream team at Google. Daydream was dedicated to exploring and creating experiences & apps in the virtual and augmented reality spaces. During my time there I worked (full-time) as an [AR/VR] UX Engineer on the WebXR team. The AR experience in the video above (from Engadget) highlights my biggest accomplishment while working at Google: an interactive, educational, web-based augmented reality experience that allows users to view and learn about an ancient Aztec sculpture called a Chacmool. You can read more about it here: https://developers.google.com/web/updates/2018/06/webar-chacmool

At Daydream I helped shape the future of the WebXR API, made numerous web-based AR prototypes, created & open sourced WebAR examples on Google’s behalf, and gave talks at SIGGRAPH Asia and FITC Toronto about the state of the WebXR API, showing examples of what was possible with the experimental API.

Below are specific projects and prototypes that I worked on alongside Josh Carpenter (our ambitious & visionary leader), Iker Jamardo Zugaza (our tech lead and motivator), Jordan Santell (a hardcore (web) UX / software engineer), Marley Rafson (a young and motivated software engineer), and Max Rebuschatis (a badass Google veteran and software engineer from the games industry). We accomplished a lot as a team, but this post will specifically highlight my contributions and explorations.

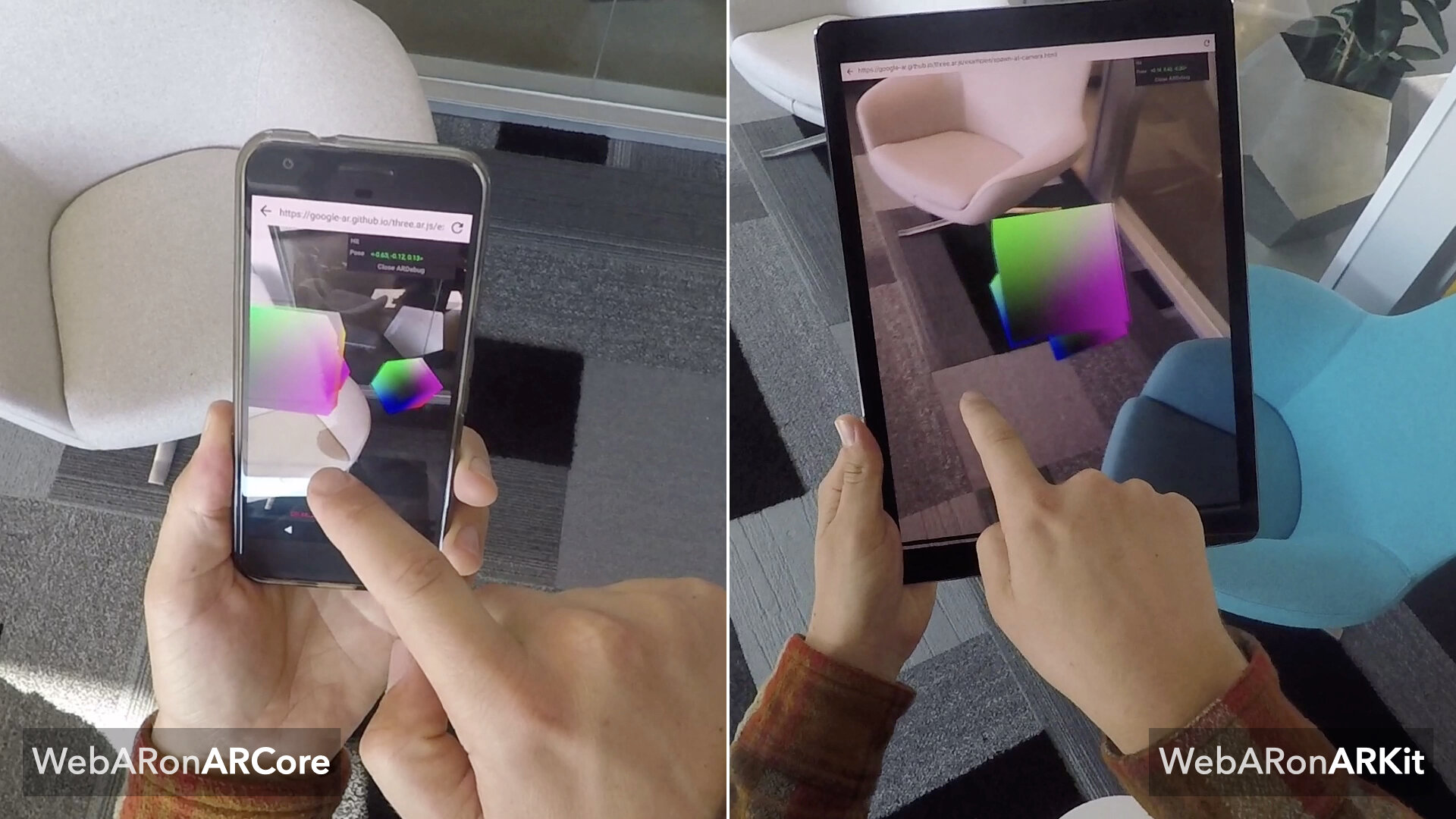

One of the goals of the WebXR team was to see if we could leverage current web technologies to build the AR metaverse on the web. To do that, we needed to access the ARKit and ARCore APIs via JavaScript. Lucky for us, Iker Jamardo's earlier work had exposed AR features in Chromium on top of the Tango AR platform. Building on it, the team (mainly Iker) created two experimental mobile browser apps (iOS, Android) that allowed access to the camera image frame and the device’s native AR API. I helped heavily with the iOS browser, WebARonARKit (see below). I designed the app’s interface and helped expose the ARKit API using a WKWebView and WKUserScripts (see specific commits here).

These experimental browsers let the WebXR team prototype AR experiences that helped shape the WebXR API. They also helped us understand the challenges involved in using the web as the fundamental building block of the AR metaverse, and what features developers would need to make their AR experiences. Below is a montage of AR prototypes that the team worked on. I worked on AR Graffiti (a joint effort between Max Rebuschatis and me) and the AR Screen Space UI Controls, which explored screen space interface controls.

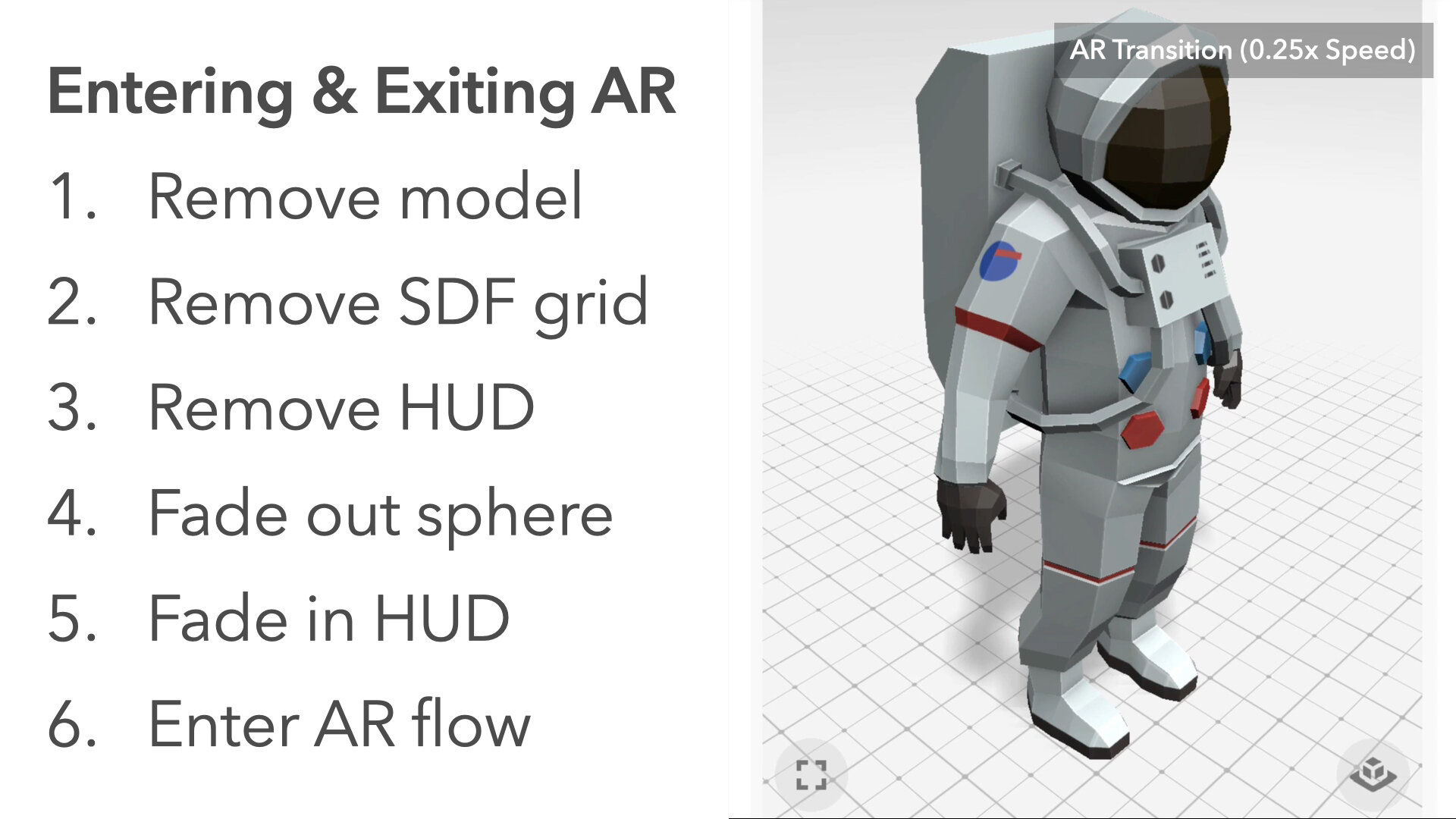

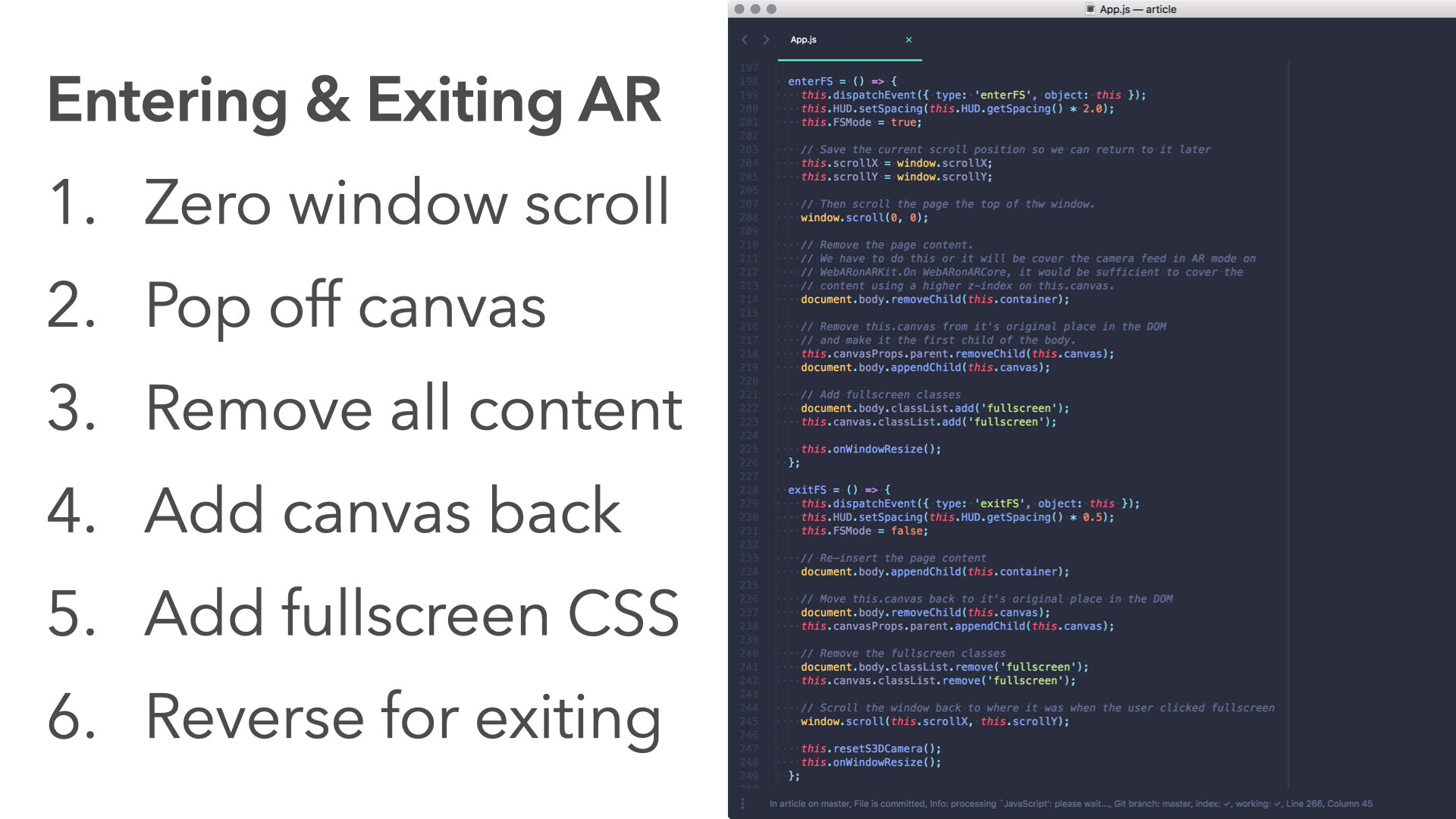

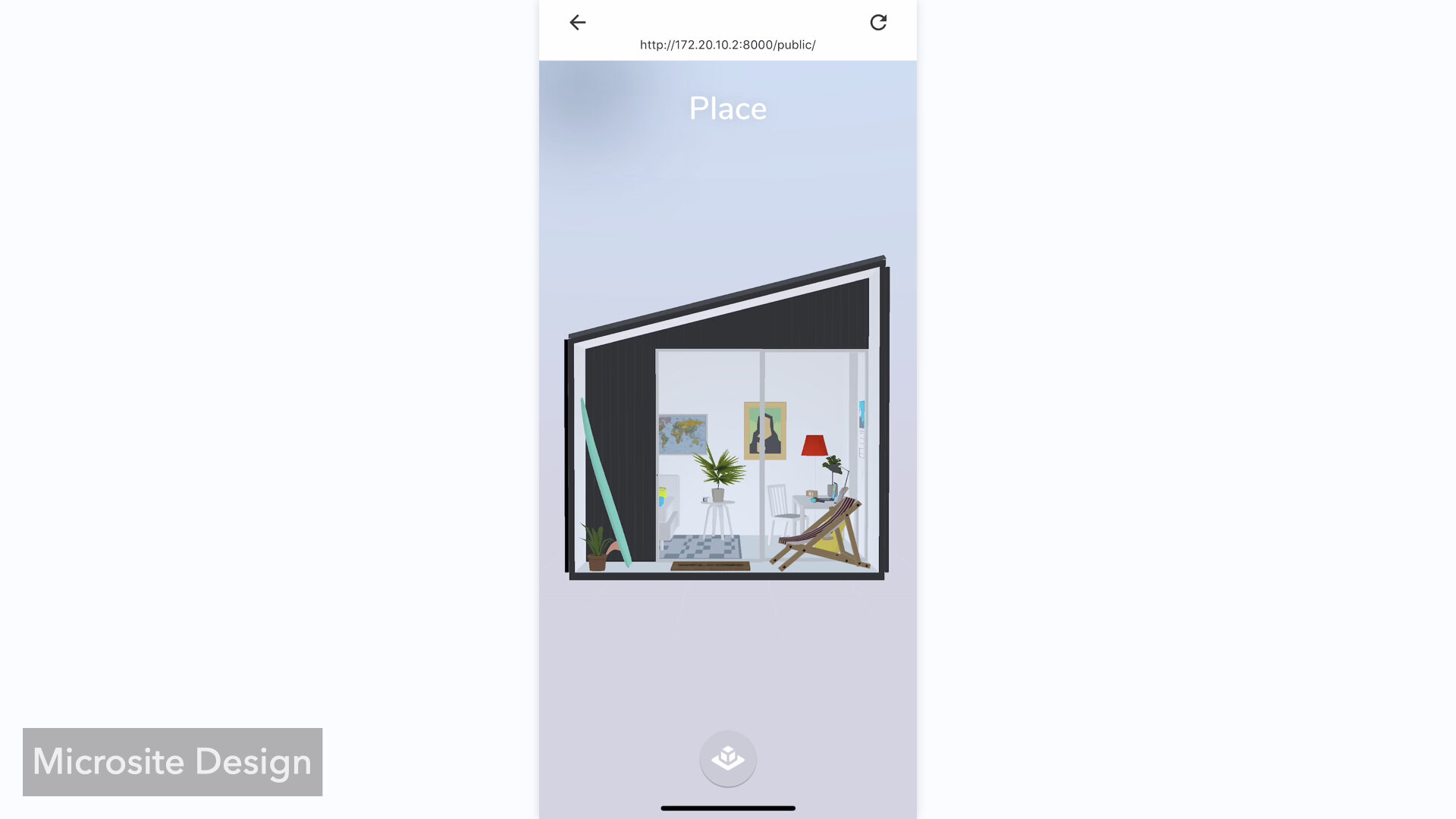

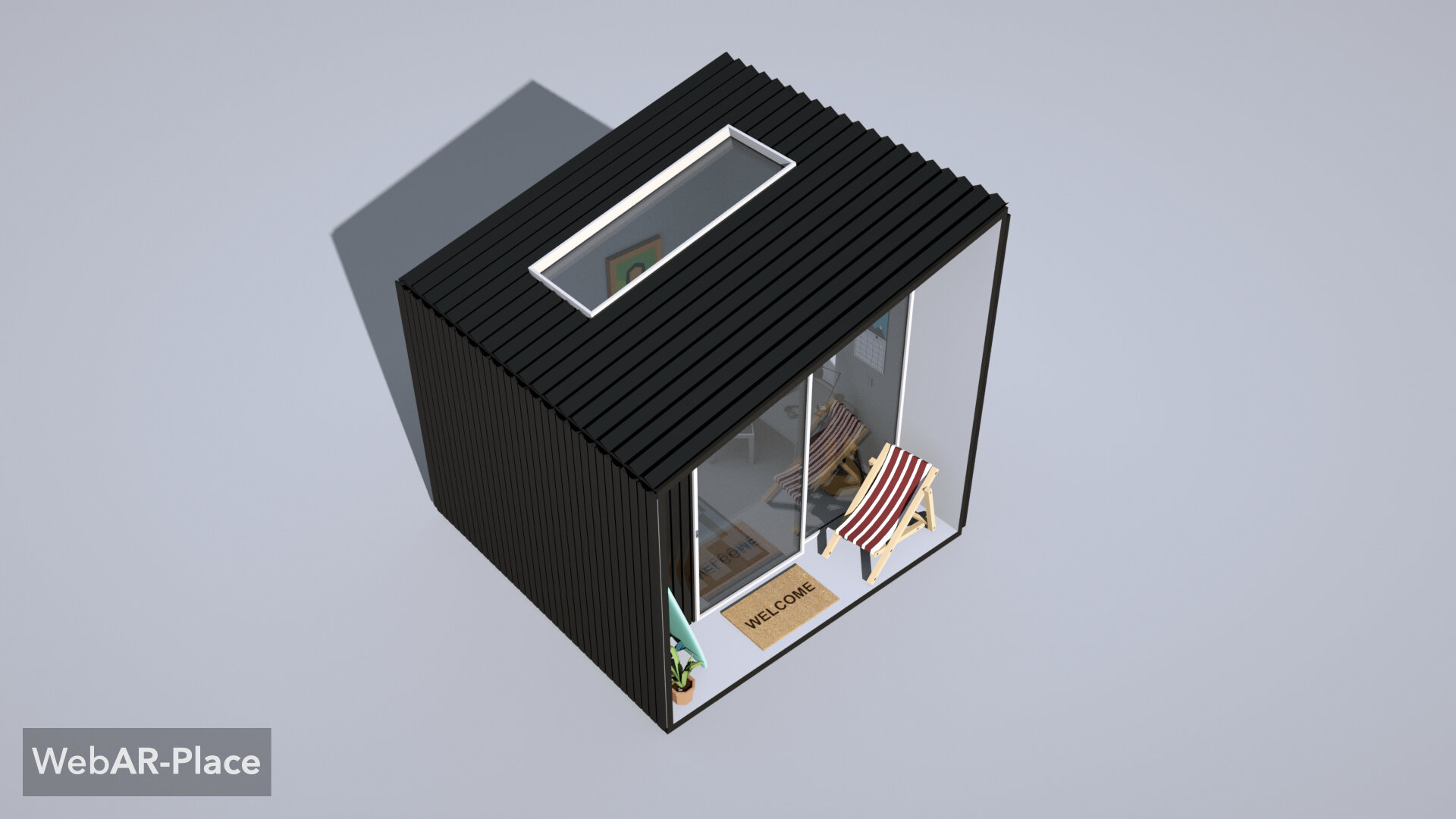

In addition to the explorations above, I meticulously designed and coded the visual transition from a normal web page to its augmented reality experience. This involved a lot of DOM manipulation via JavaScript and creating animations to make the visual transition feel as smooth and intuitive as possible. This is seen in the demos below when the models shrink down and then grow back to size when the screen is tapped to place the model.

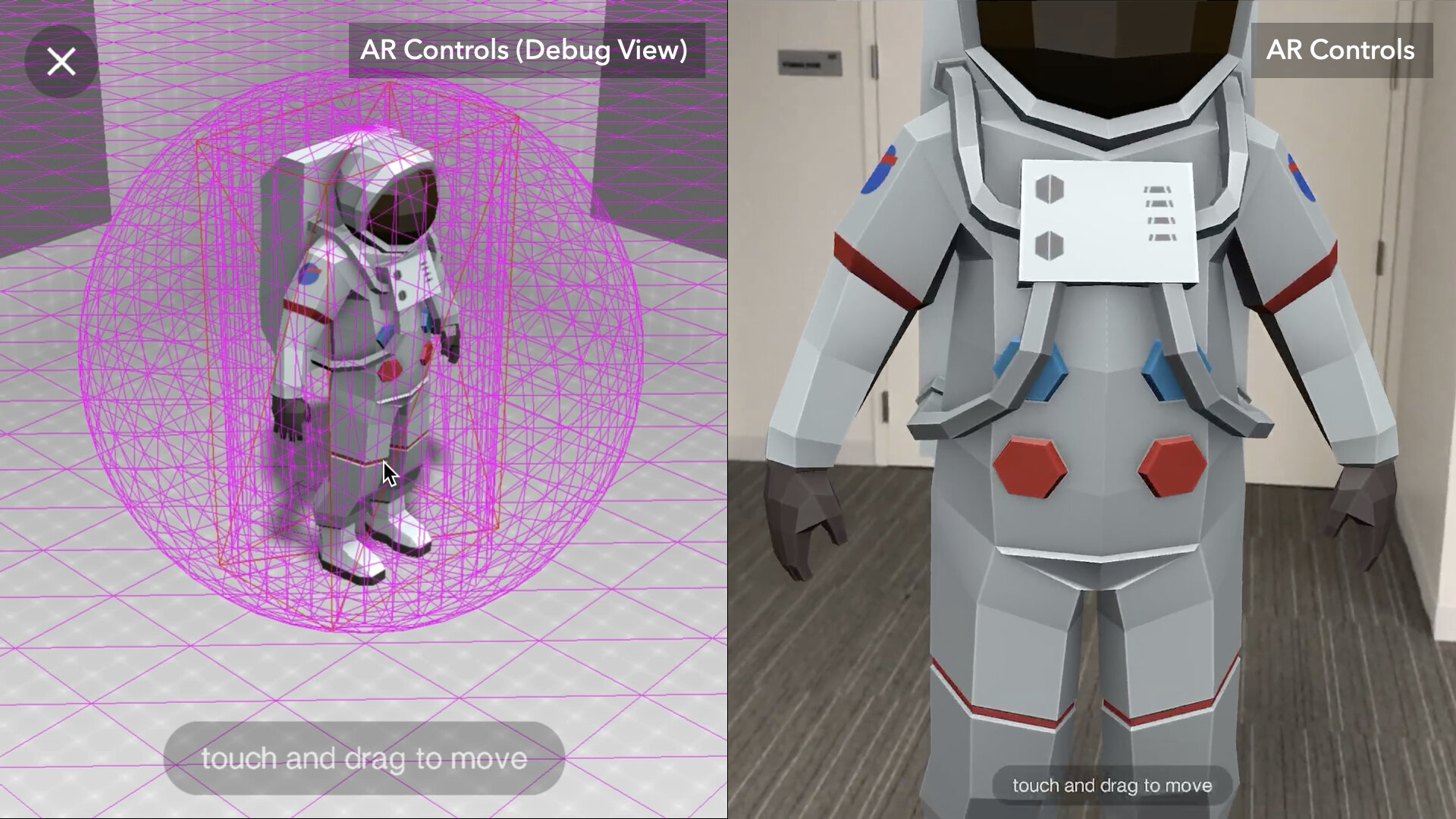

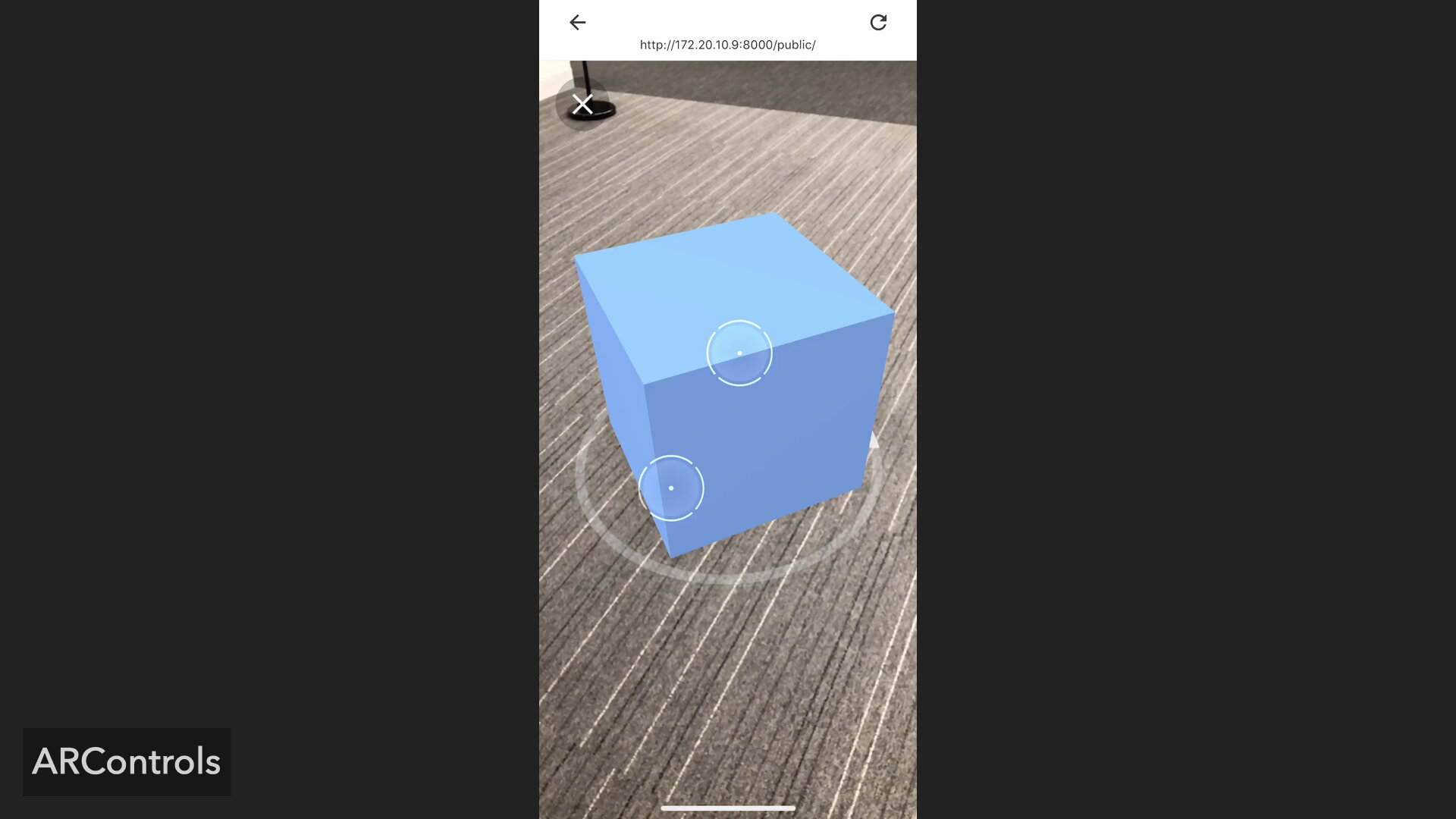

Moreover, I designed and coded reusable, natural, intuitive and fluid controls for manipulating an object once it is placed in AR (via touches on screen, see AR Controls below). This allowed the team to easily manipulate an object’s size, position and orientation while in AR. To show others how these controls worked, I designed and coded visual (screen space) touch indicators to show the user’s touches on screen.

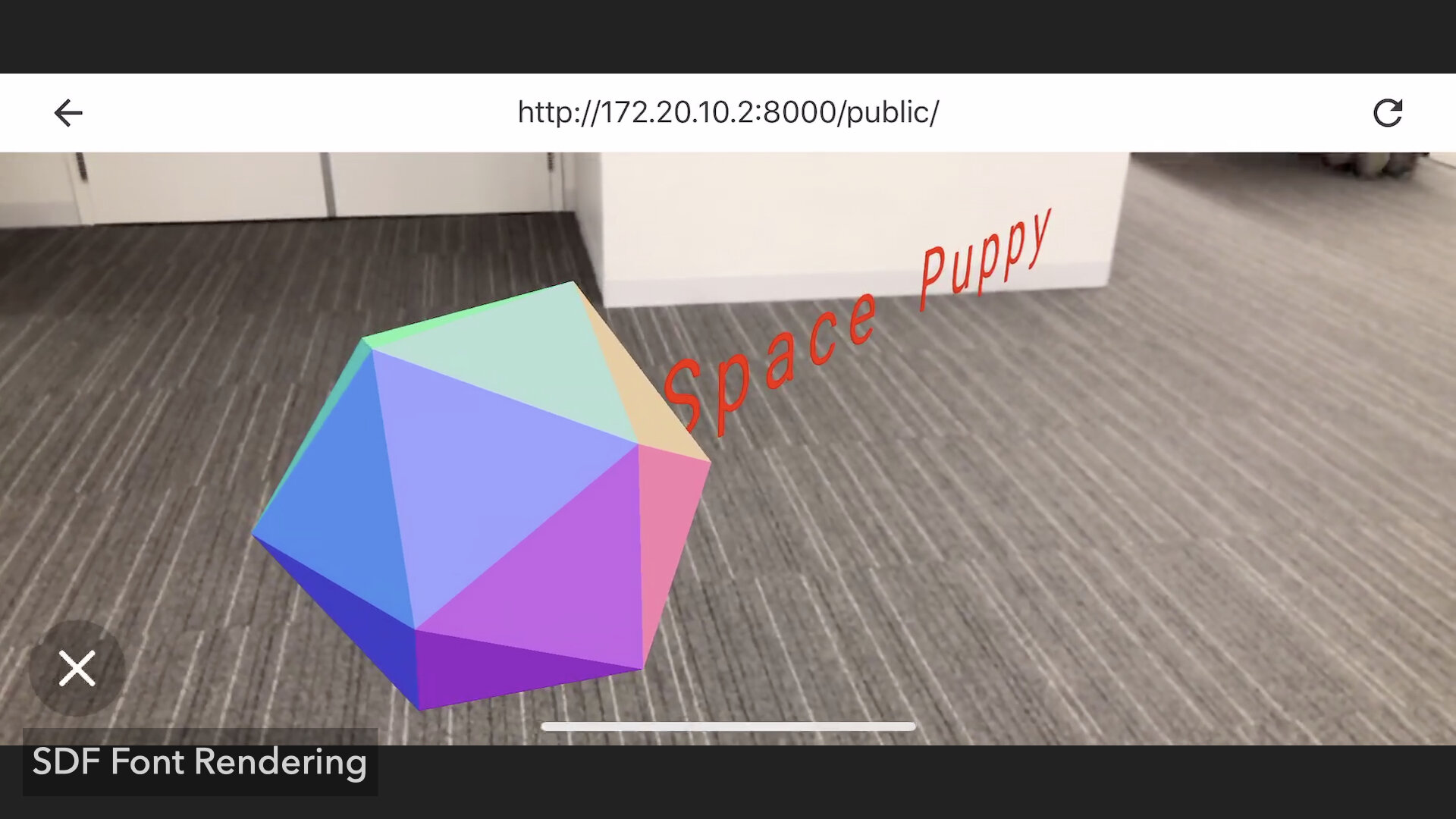
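As a rough illustration of how two-finger touch controls like these can be derived, the sketch below turns two consecutive pairs of touch points into a scale factor, a rotation angle, and a pan offset. It is a minimal sketch under assumptions, not the code from the prototypes: the `Point` and `GestureDelta` types and the `twoFingerDelta` function are hypothetical names introduced here.

```typescript
// Hypothetical types and function; illustrative only, not from the original prototypes.
interface Point { x: number; y: number; }
interface GestureDelta { scale: number; rotation: number; pan: Point; }

// Compare the previous and current positions of two touches and derive
// how much the fingers spread (scale), twisted (rotation), and moved (pan).
function twoFingerDelta(prev: [Point, Point], curr: [Point, Point]): GestureDelta {
  const span = (a: Point, b: Point): number => Math.hypot(b.x - a.x, b.y - a.y);
  const angle = (a: Point, b: Point): number => Math.atan2(b.y - a.y, b.x - a.x);
  const mid = (a: Point, b: Point): Point => ({ x: (a.x + b.x) / 2, y: (a.y + b.y) / 2 });

  // Pinch: ratio of finger spans. Guard against a zero-length previous span.
  const prevSpan = span(prev[0], prev[1]);
  const scale = prevSpan > 0 ? span(curr[0], curr[1]) / prevSpan : 1;

  // Twist: change in the angle of the line between the fingers, wrapped to (-PI, PI].
  let rotation = angle(curr[0], curr[1]) - angle(prev[0], prev[1]);
  if (rotation > Math.PI) rotation -= 2 * Math.PI;
  if (rotation <= -Math.PI) rotation += 2 * Math.PI;

  // Pan: movement of the midpoint between the two fingers.
  const prevMid = mid(prev[0], prev[1]);
  const currMid = mid(curr[0], curr[1]);
  return { scale, rotation, pan: { x: currMid.x - prevMid.x, y: currMid.y - prevMid.y } };
}
```

In a real page, the point pairs would come from `touchmove` events, and the resulting deltas would be applied to the placed object's size, orientation, and position each frame.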

While prototyping these AR demos I designed and coded a reusable diegetic visual indicator that showed the model’s orientation and 2D bounds. Furthermore, I worked on getting crisp and smooth text rendering working (via SDF font rendering techniques) and then explored proximity-based text annotations & animations (when viewing content in AR, text is only visible when the distance to the text is appropriate and when the user is in the proximity of the model and facing the model head on).
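The proximity rule for annotations described above can be sketched as a small visibility test. This is only an illustration of the idea, assuming a distance window and a "facing" threshold; the `AnnotationOptions` fields, the `annotationVisible` function, and the vector helpers are hypothetical names introduced here, not the prototype's code.

```typescript
// Hypothetical names throughout; a sketch of a proximity-based visibility rule.
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const length = (a: Vec3): number => Math.sqrt(dot(a, a));
const normalize = (a: Vec3): Vec3 => {
  const len = length(a);
  return len > 0 ? [a[0] / len, a[1] / len, a[2] / len] : a;
};

interface AnnotationOptions {
  minDistance: number; // closer than this, the text is hidden (assumed, in meters)
  maxDistance: number; // farther than this, the text is hidden (assumed, in meters)
  minFacing: number;   // cosine threshold; 1 means viewed exactly head on
}

// Returns true when the camera is within the distance window and is
// positioned roughly in front of the text (along its outward normal).
function annotationVisible(
  cameraPos: Vec3,
  textPos: Vec3,
  textNormal: Vec3,
  opts: AnnotationOptions
): boolean {
  const toCamera = sub(cameraPos, textPos);
  const distance = length(toCamera);
  if (distance < opts.minDistance || distance > opts.maxDistance) return false;
  const facing = dot(normalize(textNormal), normalize(toCamera));
  return facing >= opts.minFacing;
}
```

A boolean keeps the sketch short; an animated version could map the same distance and facing values to an opacity and ease it over time.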

While working on various AR prototypes, we encountered a problem when attempting to share these web experiences. The team wanted to share all these AR demos, but most people wouldn’t be compiling and running the experimental browsers on their phones, so they would have no way of actually experiencing the demos. Therefore we had to record these experiences.

Screen recordings were okay, but they didn’t show how users would actually interact with the content or what the AR experience would look like to users. So I took a weekend, leveraged my mechanical engineering / maker knowledge, and designed and fabricated an arm rig that would securely hold a phone (hands free of course) to record the experience happening on the demo phone. The result is shown below in this performance test.

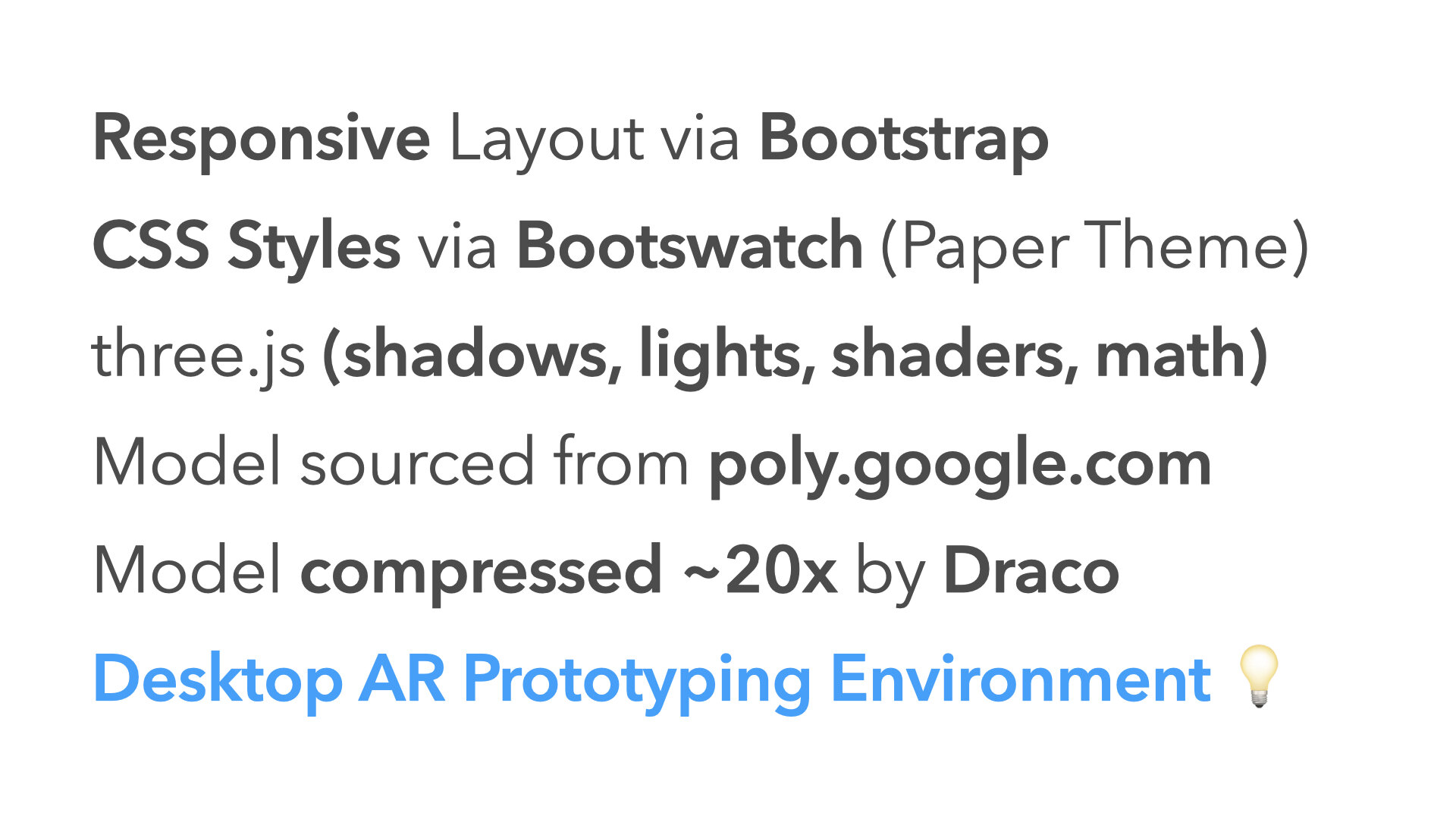

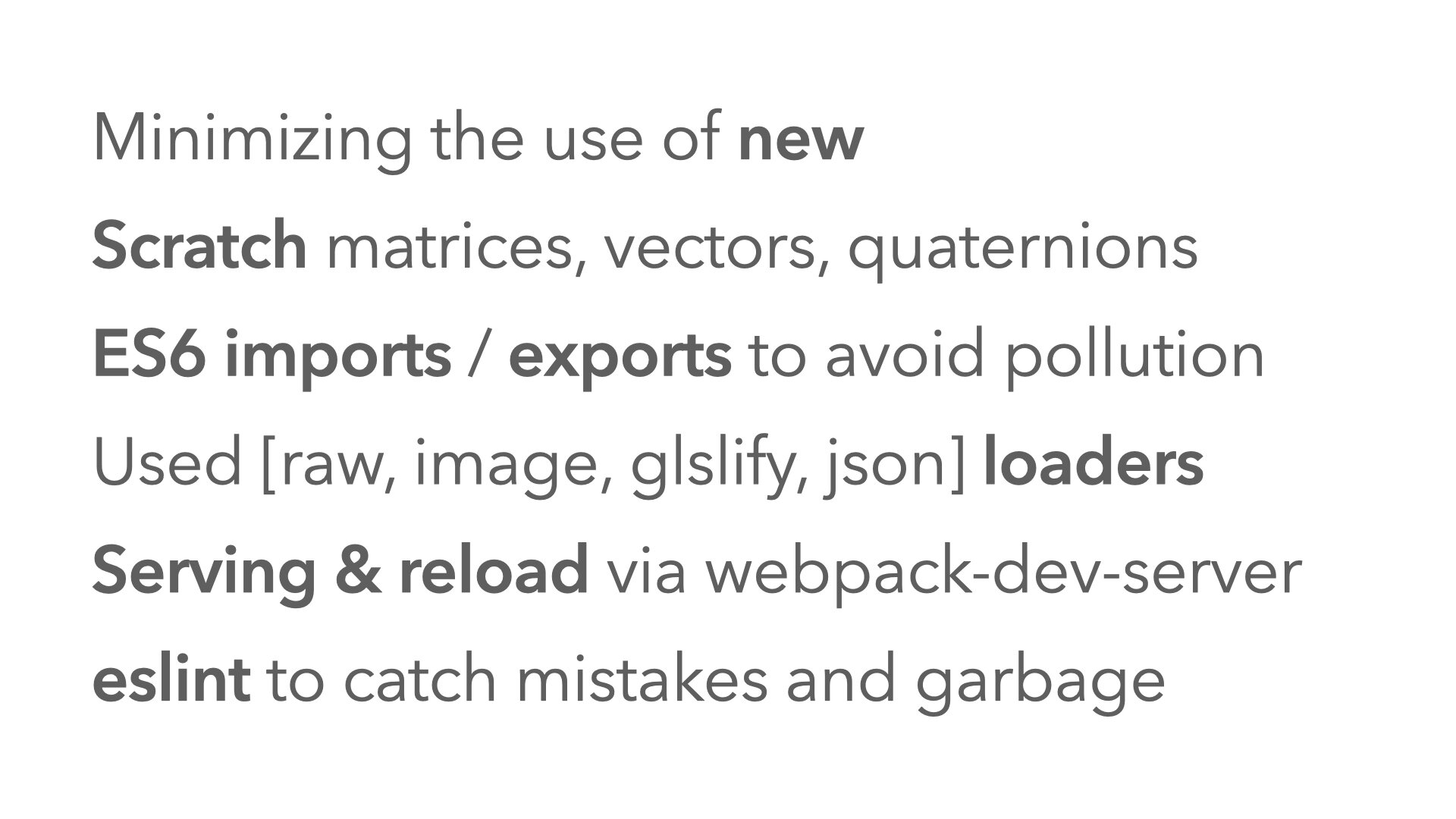

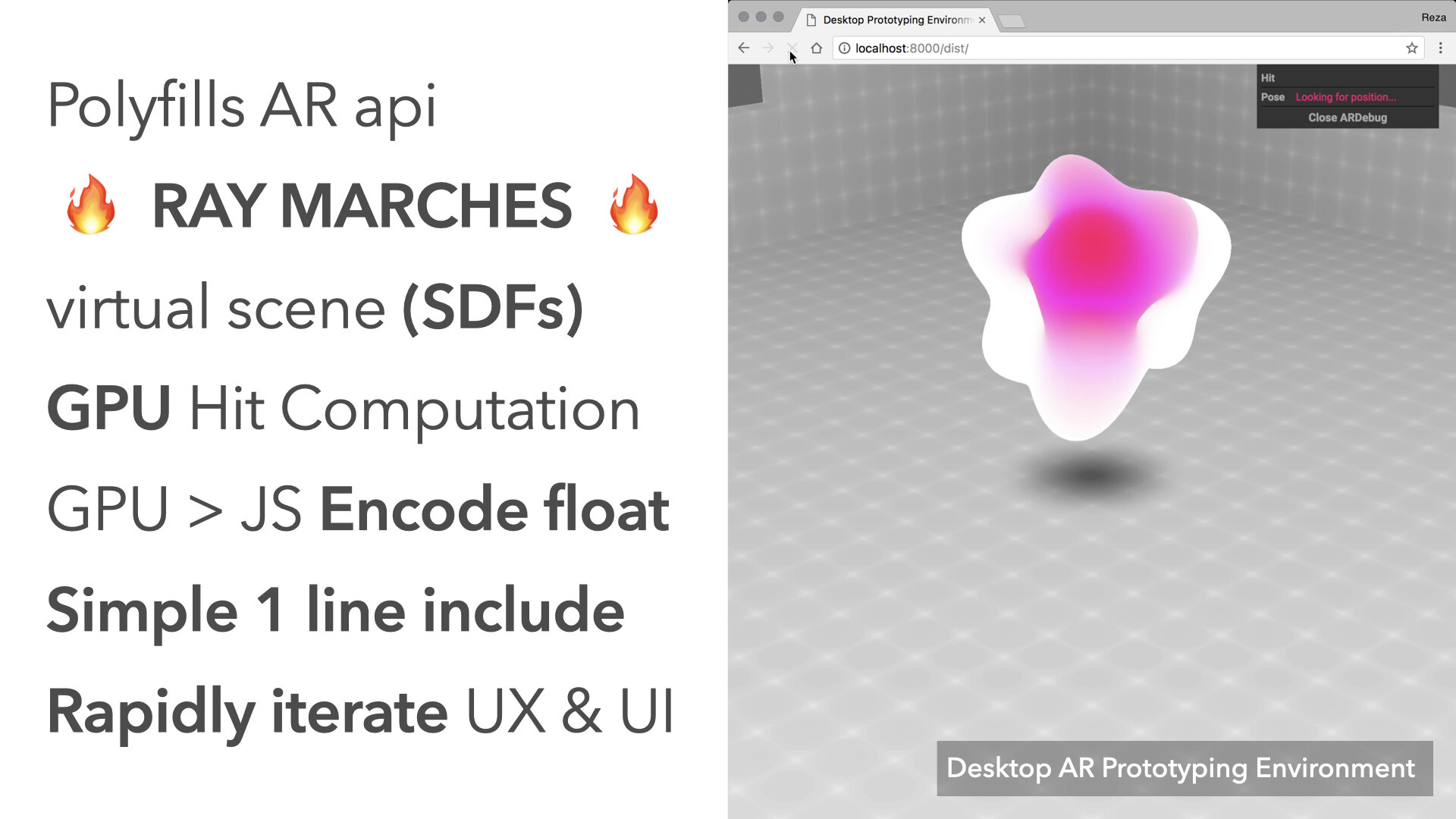

Another challenge we ran into while prototyping was the turnaround time for AR development. Luckily we were able to serve the site from our laptops and refresh the page on device when we made a code change, but the device still needed time to initialize the underlying AR session and establish 6DOF tracking and plane detection for hit testing. So I designed and coded a virtual environment using signed distance fields (via GLSL shaders & WebGL) and JavaScript magic to polyfill the WebAR API so we could quickly design visuals and prototype interactions on desktop and then test on device when needed. This accelerated our design process and allowed us to move quickly and explore more possibilities. The video below shows the AR Screen Space UI Controls prototype running in this environment.

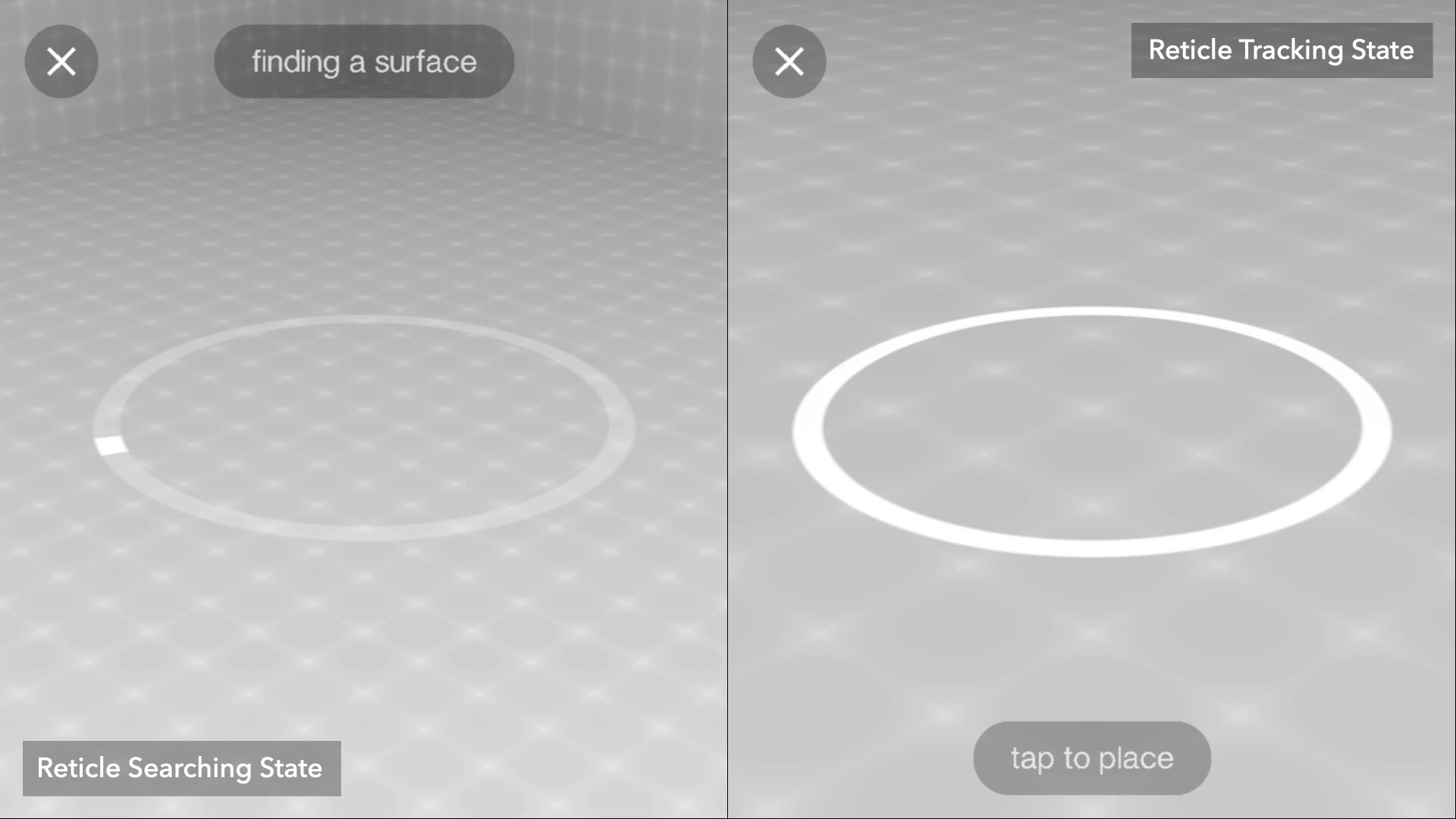
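To give a feel for how a signed distance field can stand in for real plane detection on desktop, here is a minimal sketch that sphere-traces a ray against an SDF and returns the hit point, much like a fake hit test result. It is an assumption about one way to do this, not a description of the actual polyfill (which ran in GLSL shaders and WebGL); `Sdf`, `floorSdf`, and `sphereTrace` are hypothetical names.

```typescript
// Hypothetical names; a CPU-side sketch of sphere tracing, not the original polyfill.
type Vec3 = [number, number, number];
type Sdf = (p: Vec3) => number;

// Signed distance to an infinite floor at y = 0 (positive above the floor).
const floorSdf: Sdf = (p) => p[1];

// March along the ray, stepping by the distance the SDF reports as safe.
// `dir` is assumed to be normalized. Returns the hit point, or null on a miss.
function sphereTrace(
  origin: Vec3,
  dir: Vec3,
  sdf: Sdf,
  maxSteps = 64,
  maxDist = 20,
  eps = 1e-4
): Vec3 | null {
  let t = 0;
  for (let i = 0; i < maxSteps && t < maxDist; i++) {
    const p: Vec3 = [origin[0] + dir[0] * t, origin[1] + dir[1] * t, origin[2] + dir[2] * t];
    const d = sdf(p);
    if (d < eps) return p; // close enough to the surface: report a hit
    t += d;                // otherwise advance by the safe distance
  }
  return null;
}
```

For example, `sphereTrace([0, 1.5, 0], [0, -1, 0], floorSdf)` casts a ray straight down from a camera held 1.5 units above the virtual floor, while a ray pointing upward returns `null`, mirroring a hit test with no detected plane.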

Using this virtual desktop prototyping environment, I was able to quickly iterate on the design of an intelligent reticle that visually communicates to the user the current state of the AR session and hit test API. When the underlying AR system has not established tracking or registered any planes (which are needed to get a successful hit test result), the reticle is in a searching state, visualized like a loading indicator (first video below). After establishing tracking and detecting a plane, the reticle’s progress indicator transforms into a full circular arc and pulsates (second video below). When the user taps the screen to place a model, the reticle expands while fading away (third video below).

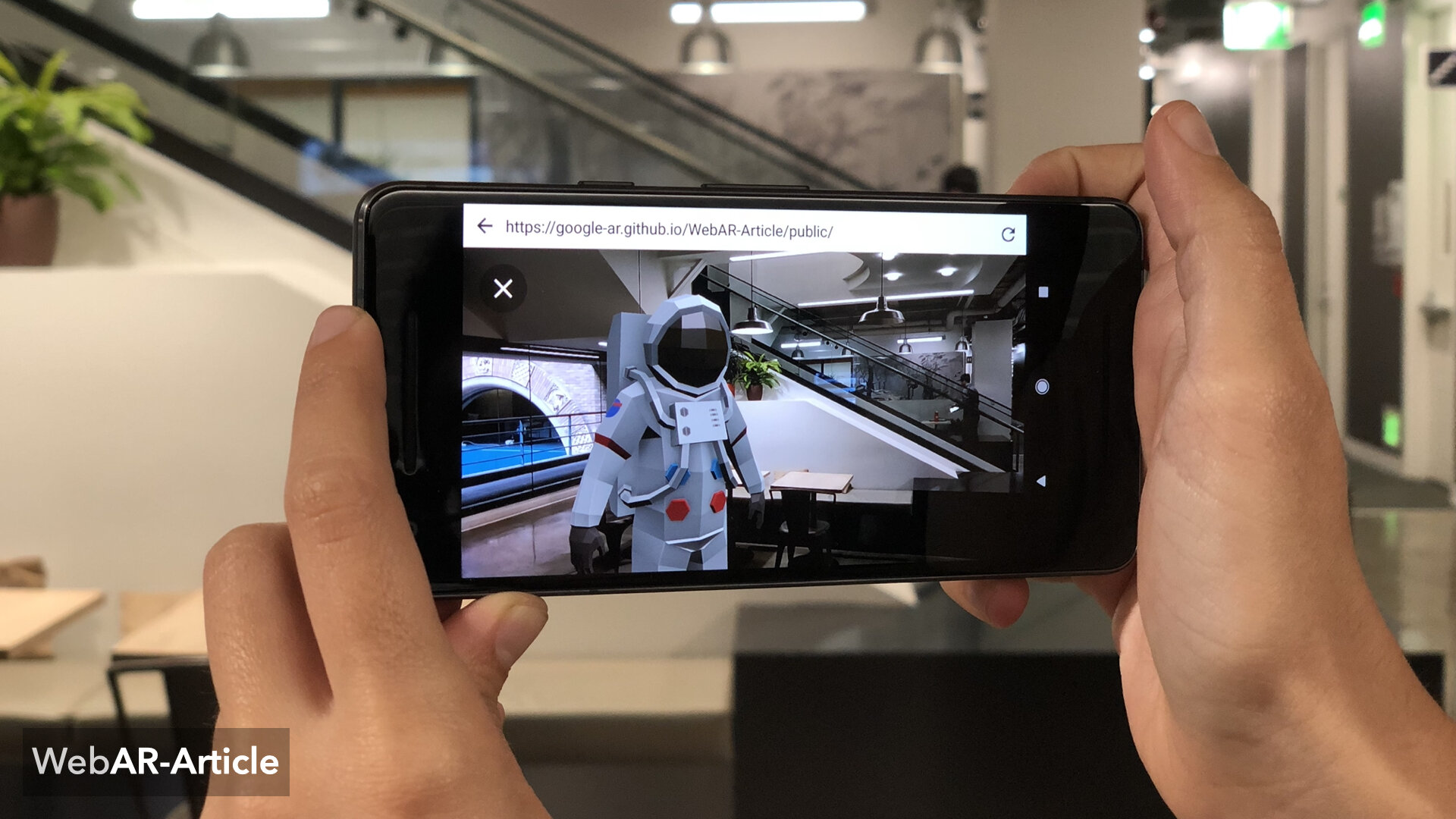

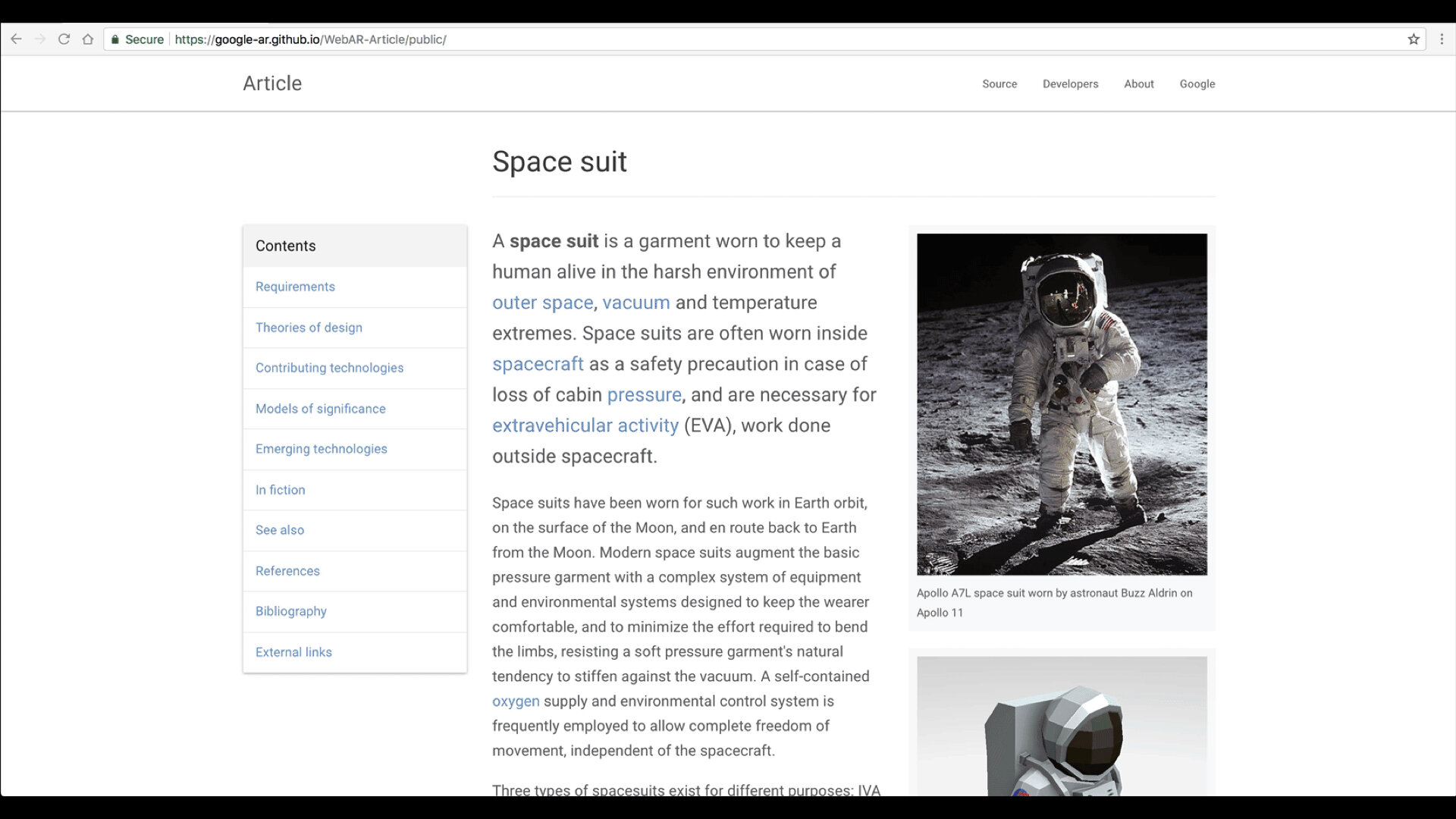

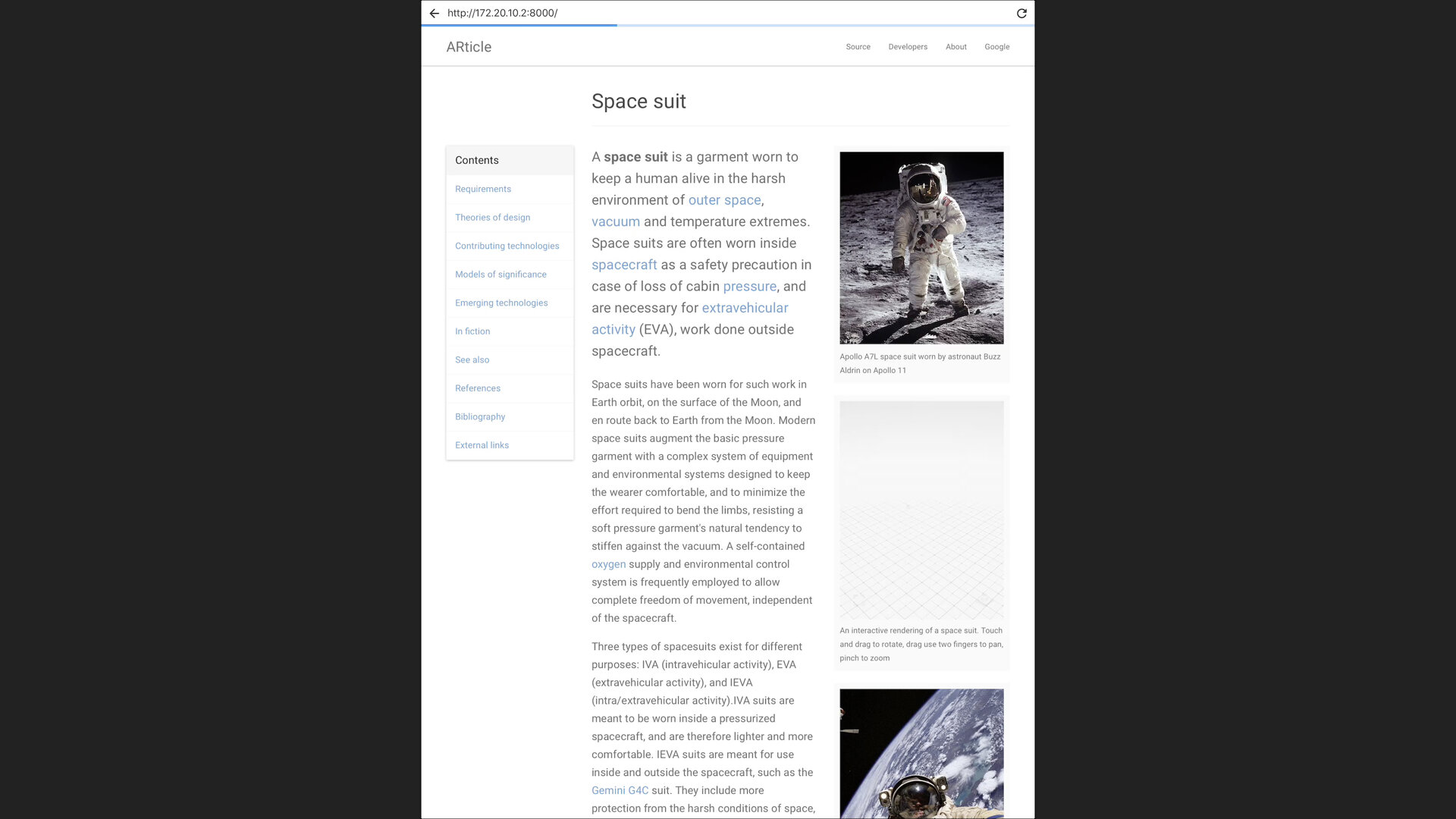

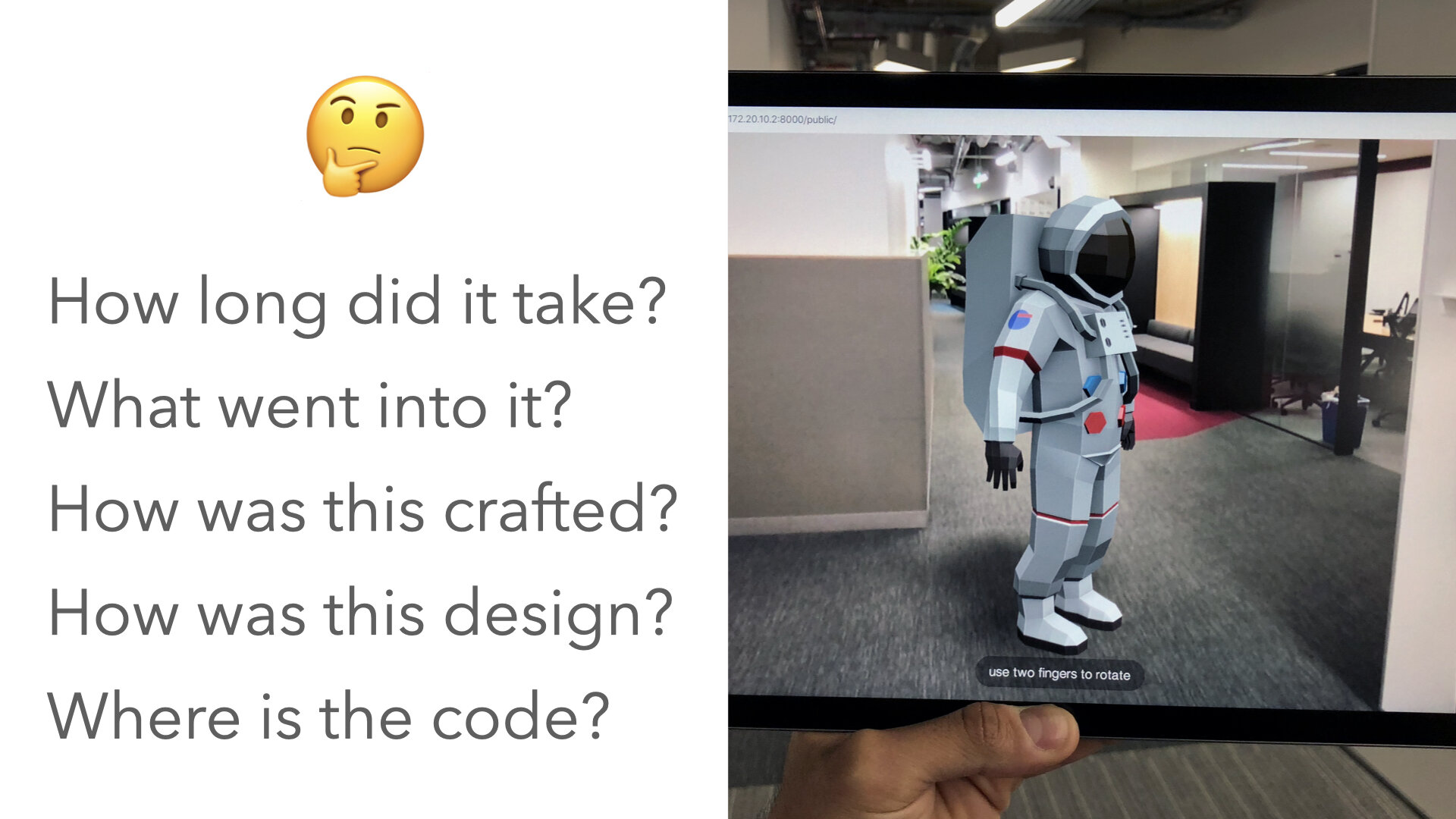

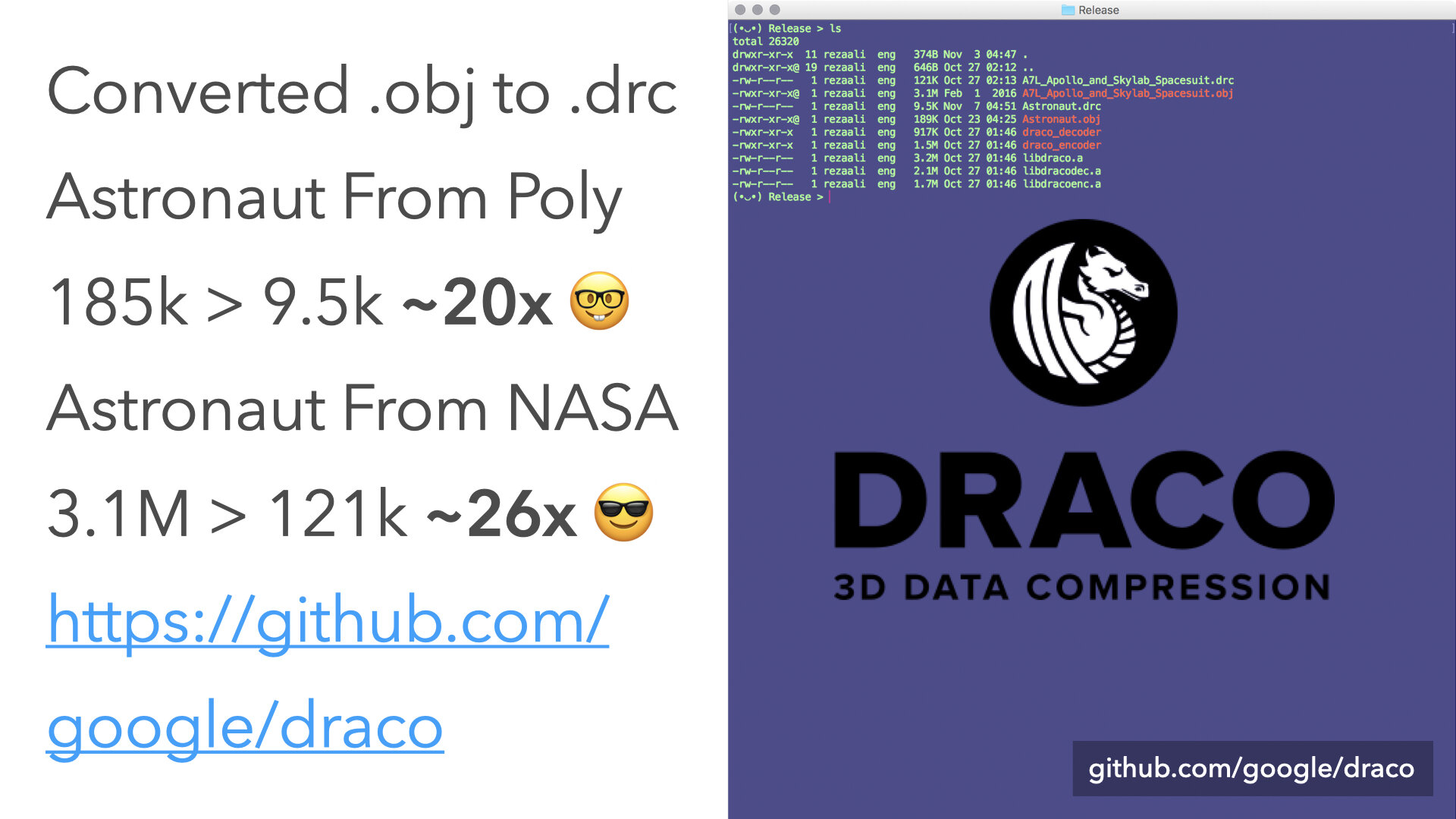

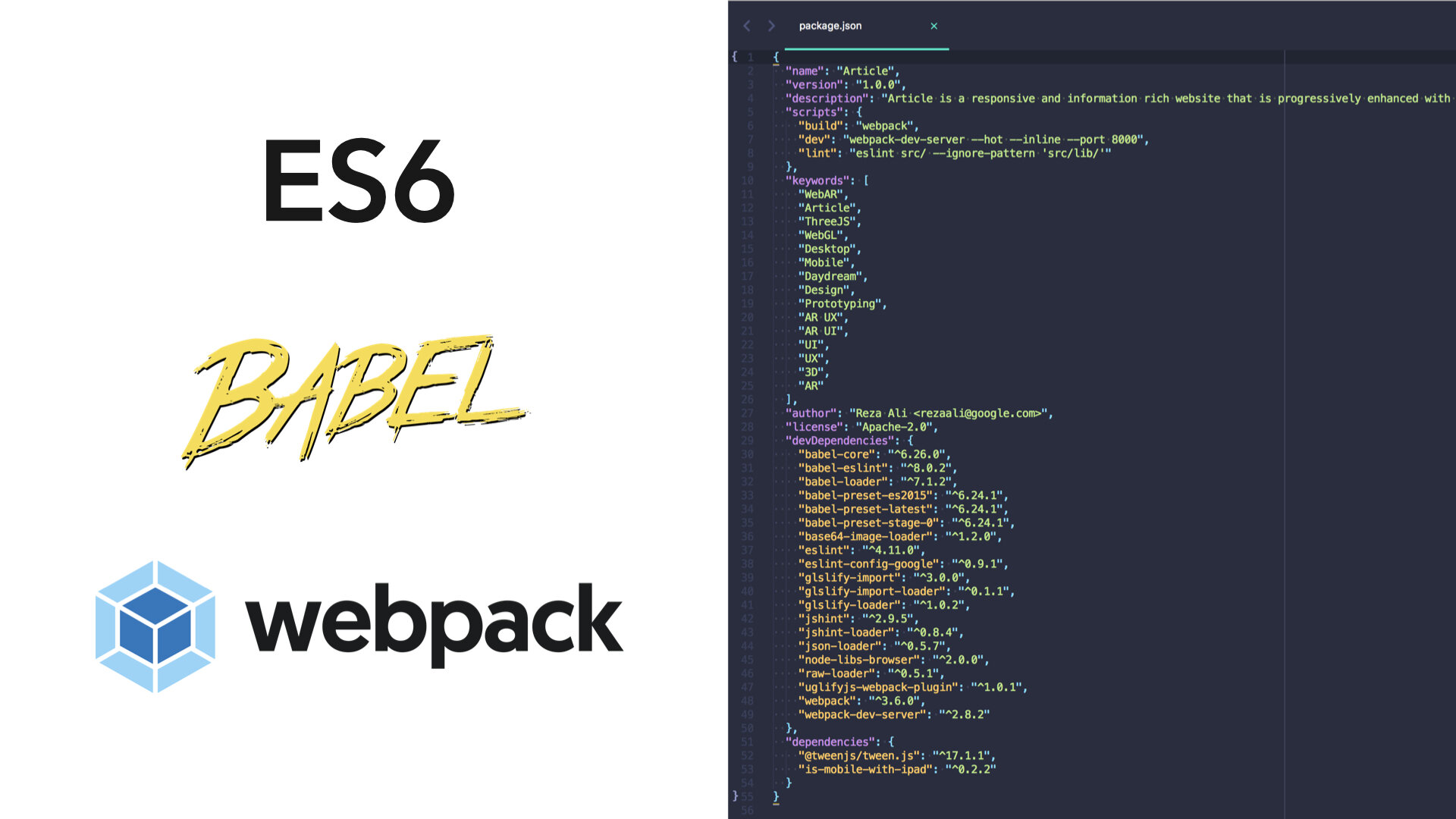
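The reticle behavior described above maps naturally onto a small state machine. The sketch below is one hedged way to model it; the state names, the `ArStatus` shape, and `nextReticleState` are hypothetical and only mirror the three visual states described in the text.

```typescript
// Hypothetical names; a sketch of the three reticle states, not the prototype's code.
type ReticleState = "searching" | "ready" | "placing";

interface ArStatus {
  tracking: boolean;      // has the AR session established tracking?
  planeDetected: boolean; // has at least one plane been registered?
}

// Compute the next visual state from the current state, the AR status, and a tap.
function nextReticleState(state: ReticleState, status: ArStatus, tapped: boolean): ReticleState {
  // Assumption: once placement starts, the expand-and-fade animation runs to completion.
  if (state === "placing") return "placing";

  // Without tracking and a plane, hit tests cannot succeed: show the loading-style arc.
  if (!status.tracking || !status.planeDetected) return "searching";

  // A tap only places the model when the reticle is already in its pulsating ready state.
  if (state === "ready" && tapped) return "placing";
  return "ready";
}
```

A renderer would call this once per frame and pick the animation (partial spinning arc, full pulsating arc, or expand and fade) from the returned state.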

Given these tools, one of the first full experiences we open sourced and shared with the world was Article. There is a full detailed writeup by Josh Carpenter and myself here. This writeup on Google’s blog contains tons of web-based AR tips and tricks, so check it out if you are interested in learning about our design process, workflow, JS tooling and optimization techniques. This blog post introduced the WebAR API to developers, designers and the world. It created interest in the technology and showed how the web could be leveraged as the basic building block for the AR metaverse.

Towards the end of my time at Google I created documentation, helped to write two blog posts (1, 2) and created presentations for FITC Toronto 2018 & SIGGRAPH Asia 2018 on the state of the WebXR API. These presentations covered best practices for designing & prototyping AR experiences on the web. The slides below sum up our learnings, explorations, and engineering optimizations. I left Google in June 2018 and moved to Los Angeles to live a more balanced life and dive deeper into real-time graphics programming (Metal) via a full-time contracting position at Apple.